A dumb CD solution with GitHub Webhooks and a shell script

I’ve been working on the backend of a school project (it will become public soon) for the last few weeks. It’s a Python 3.10 + FastAPI + psycopg3 API backed by a PostgreSQL DB. Our server is a plain Ubuntu 20.04 box on Hetzner and our deployment is as simple as a Python venv and a systemd service (with socket activation!). No Docker, no Kubernetes, no supervisord. We follow the KISS philosophy. To be perfectly honest, I wanted to install Arch on our server, but we agreed that Ubuntu is a bit more reliable.

We’re using GitHub Actions as a CI solution and it works well: it checks our code, builds a wheel and stores it as a build artifact. What I find really boring and time-consuming is manually downloading the wheel to our server and updating the Python venv. Wait, isn’t this problem commonly solved with ✨ CD ✨?

OK, there are tons of complete CD solutions for containerized and advanced workloads, using Docker, Kubernetes, AWS… But I’m a dumb idiot with some decent scripting knowledge and no will to learn any of these technologies. How can I start a script whenever CI builds a new wheel on master? Enter GitHub Webhooks!

Basically, GH will send an HTTP POST request to a chosen endpoint whenever certain events happen. In particular, we are interested in the workflow_run event.

If you create a webhook on GitHub, it will ask you for a secret used to secure requests to your endpoint. Just choose a random string (openssl rand -hex 8) and write it down: our script will use it to check that requests are actually signed by GitHub.

Who needs an HTTP library to process requests?

I decided to take a very simple approach: 1 successful CI build = 1 script run. This means 1 webhook request = 1 script run. The simplest way I came up with to do this is a systemd socket-activated Bash script. Every time systemd receives a connection on a socket, it starts a custom process that handles the connection: this is how most inetd-style daemons work.

UNIX history recap (feel free to skip!): traditional UNIX network daemons (i.e., network services) would accept() connections on managed sockets and then fork() a different process to handle each of these. With socket activation (as implemented by inetd, xinetd or systemd), a single daemon (or directly the init system!) listens on the appropriate ports for all services on the machine and does the job of accepting connections. Each connection is handled by a different process, launched as a child of the socket manager. This minimizes load if the service is not always busy, because there won’t be any processes stuck waiting on sockets. Every time a connection is closed, the corresponding process exits and the system remains in a clean state.

The socket manager is completely protocol-agnostic: it is up to the service-specific processes to implement the appropriate protocols. In our case, systemd will start our Bash script and hand it the connection socket as file descriptors (its standard input and output, as configured in the service unit). This means that our Bash script will have to talk HTTP! [1]

How do we do this?

systemd and socket activation

Let’s start by configuring systemd (see the systemd.socket man page for all the details). We have to create a socket unit and a corresponding service unit template. I’ll use cd-handler as the unit name. I will set up systemd to listen on the UNIX domain socket /run/myapp-cd.sock, which you can point your reverse proxy (e.g. NGINX) to, and on TCP port 8027, which is mostly for debugging purposes. If you don’t need HTTPS, though, you can use systemd’s socket directly as the webhook endpoint. [2]

Socket unit:

```ini
# /etc/systemd/system/cd-handler.socket
[Unit]
Description=Socket for CD webhooks

[Socket]
ListenStream=/run/myapp-cd.sock
ListenStream=0.0.0.0:8027
Accept=yes

[Install]
WantedBy=sockets.target
```

Service template unit:

```ini
# /etc/systemd/system/cd-handler@.service
[Unit]
Description=GitHub webhook handler for CD
Requires=cd-handler.socket

[Service]
Type=simple
# Path to our Bash webhook handler (systemd only treats whole lines as comments)
ExecStart=/var/lib/cd/handle-webhook.sh
StandardInput=socket
StandardOutput=socket
StandardError=journal
TimeoutStopSec=5

[Install]
WantedBy=multi-user.target
```

Accept=yes makes systemd start a new instance of this template service for each connection. Create a Bash script in /var/lib/cd/handle-webhook.sh; for now, it will only answer 204 No Content to every possible request. Remember to make the script executable (chmod +x). We will communicate with the socket using the standard streams (stdin/stdout; stderr will be sent to the system journal).

```bash
#!/bin/bash
# /var/lib/cd/handle-webhook.sh
printf "HTTP/1.1 204 No Content\r\n"
printf "Server: TauroServer CI/CD\r\n"
printf "Connection: close\r\n"
printf "\r\n"
```

Run systemctl daemon-reload && systemctl enable --now cd-handler.socket and you are ready to go. Test our dumb HTTP server with curl -vv http://127.0.0.1:8027 or, if you’re using the awesome HTTPie, with http -v :8027. If you’re successfully receiving a 204, we have just ~~ignored~~ processed an HTTP request with Bash ^^
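Since StandardInput=socket and StandardOutput=socket turn the connection into the script’s stdin and stdout, you can also exercise a handler without systemd by feeding it a fake request yourself. A minimal sketch, assuming a temporary copy of the handler above (the temporary file and the fake request are illustrative):

```bash
#!/bin/bash
# Sketch: run the minimal 204 handler without systemd by handing it a fake
# request on stdin and capturing what it writes to stdout.
set -euo pipefail

# Temporary copy of the minimal handler (illustrative; use your real path instead)
handler=$(mktemp)
cat >"$handler" <<'EOF'
#!/bin/bash
printf "HTTP/1.1 204 No Content\r\n"
printf "Server: TauroServer CI/CD\r\n"
printf "Connection: close\r\n"
printf "\r\n"
EOF

# Feed a fake request on stdin (the minimal handler simply ignores it)
response=$(bash "$handler" <<<$'GET / HTTP/1.1\r\nHost: localhost\r\n\r')

# First line of the response, without the trailing carriage return
status_line=$(head -n 1 <<<"$response")
status_line=${status_line%$'\r'}
printf 'Status line: %s\n' "$status_line"
rm -f "$handler"
```

The same trick works for the full handler later on, as long as systemd-cat is available for the log function.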

Parsing the HTTP request

The anatomy of HTTP requests and responses is standardised in RFC 2616, in sections 5 and 6 respectively (RFC 2616 has since been obsoleted; the current HTTP/1.1 message syntax is defined in RFC 9112).

UNIX systems come with powerful text-processing utilities. Bash itself has parameter expansion features that will be useful in processing the HTTP request and we will use jq to extract the fields we’re interested in from the JSON payload.
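For reference, here is a minimal sketch of the parameter expansions the handler relies on, applied to made-up sample values. The exact prefix printed by openssl dgst differs between OpenSSL versions; only the part after the space matters.

```bash
#!/bin/bash
# Sketch: the parameter expansions used later in the handler, on sample values.
# The values below are made up; real ones come from the request and openssl.
set -euo pipefail

header_val=' 1234'                    # left over after IFS=: read on "Content-Length: 1234"
ghsig=' sha256=abc123'                # sample X-Hub-Signature-256 value
mysig='HMAC-SHA256(stdin)= abc123'    # sample shape of `openssl dgst -hmac` output

trimmed=${header_val# }   # remove one leading space
gh_hex=${ghsig#*=}        # remove the shortest prefix ending in "="
my_hex=${mysig#* }        # remove the shortest prefix ending in a space

printf '%s %s %s\n' "$trimmed" "$gh_hex" "$my_hex"
```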

We will build our script step by step. I’ll define a function to log data (in my case, it uses systemd-cat to write directly to the journal; you can replace its body to adapt it to your needs) and another function to send a response. send_response takes two parameters: the first is the status code followed by its description (e.g. 204 No Content) and the second is the response body (possibly empty). We’re using wc -c to count the bytes in the body for the Content-Length header, subtracting 1 for the newline that the here-string (<<<) appends before passing it to wc.

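```bash
#!/bin/bash
set -euo pipefail # Good practice!

# Configuration used in the rest of the script (placeholder values, adapt them)
GITHUB_WEBHOOK_SECRET='change-me'   # the secret chosen when creating the webhook
ENFORCE_HMAC=1                      # 0 skips signature checks (debugging only)
MAIN_BRANCH='master'
CD_SCRIPT='/var/lib/cd/deploy.sh'   # hypothetical path to your deployment script
GITHUB_API_USER='your-username'     # placeholder user for the Artifacts API
GITHUB_API_TOKEN='your-token'       # placeholder token allowed to read artifacts

function log {
    # "$@" keeps multi-word arguments such as -t "CD script" intact
    systemd-cat -t 'GitHub webhook handler' "$@"
}

function send_response {
    # $1: status code and reason, e.g. "204 No Content"; $2: body (may be empty)
    printf "Sending response: %s\n" "$1" | log -p info
    printf "HTTP/1.1 %s\r\n" "$1"
    printf "Server: TauroServer CI/CD\r\n"
    printf "Connection: close\r\n"
    printf "Content-Type: application/octet-stream\r\n"
    # Body size in bytes; <<< appends a newline, hence the - 1
    printf "Content-Length: %d\r\n" "$(( $(wc -c <<<"$2") - 1 ))"
    printf "\r\n"
    printf '%s' "$2"
}
```

If you add send_response "200 OK" "Hello World!" as the last line, you should get a 200 response with cURL or HTTPie! You can also test webhooks from the GitHub web UI and check that an OK response is received as expected. Note that we are not sending the Date response header, which RFC 2616 expects servers to include.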
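If you want to add the missing Date header, here is a minimal sketch; date_header is a hypothetical helper that you would call from send_response next to the other header printf lines. It uses the RFC 1123 format that HTTP dates follow (e.g. Sun, 06 Nov 1994 08:49:37 GMT): -u makes date print UTC and LC_ALL=C keeps day and month names in English.

```bash
#!/bin/bash
# Sketch: a Date header in the format HTTP expects, e.g.
# "Date: Sun, 06 Nov 1994 08:49:37 GMT". date_header is a hypothetical helper.
set -euo pipefail

date_header() {
    # -u: HTTP dates are always in GMT/UTC; LC_ALL=C: English day/month names
    printf 'Date: %s\r\n' "$(LC_ALL=C date -u '+%a, %d %b %Y %H:%M:%S GMT')"
}

header=$(date_header)
printf '%s\n' "${header%$'\r'}"
```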

Parsing the request line. As easy as read -r method path version. There will probably be a trailing \r on version, but we don’t care much (we will assume HTTP/1.1 everywhere ^^).
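The request line has to be consumed before the header loop starts. A minimal sketch of that step follows; the function name parse_request_line and the sample request are illustrative, and stripping the trailing \r from version is an optional extra.

```bash
#!/bin/bash
# Sketch: read the request line ("METHOD PATH VERSION\r\n") from stdin.
# The here-string below stands in for the socket.
set -euo pipefail

parse_request_line() {
    # Sets the globals method, path and version
    read -r method path version
    version=${version%$'\r'}    # drop the CR left over from the CRLF line ending
}

parse_request_line <<<$'POST /webhook HTTP/1.1\r'
printf 'method=%s path=%s version=%s\n' "$method" "$path" "$version"
```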

Parsing headers. Headers immediately follow the request line. Expert Bash users would probably use an associative array to store request headers; we will just use a case statement to extract the headers we’re interested in. We split each line on : by setting the special variable IFS (the input field separator, which defaults to space, tab and newline). tr removes the trailing \r\n, and parameter expansion (${val# }) removes the space that follows : in each header line.

```bash
content_length=0
delivery_id=none
ghsig=none
event_type=none

# Read "Key: value" header lines up to the empty line that ends the headers
while IFS=: read -r key val; do
    # read drops the "\n", so the empty line is just "\r"
    [[ "$key" == $'\r' ]] && break
    val=$(tr -d '\r\n' <<<"$val")
    case "$key" in
        "Content-Length")
            content_length="${val# }"
            ;;
        "X-GitHub-Delivery")
            delivery_id="${val# }"
            ;;
        "X-GitHub-Event")
            event_type="${val# }"
            ;;
        "X-Hub-Signature-256")
            # This will be trimmed later when comparing to OpenSSL's HMAC
            ghsig=$val
            ;;
        *)
            ;;
    esac
    printf 'Header: %s: %s\n' "$key" "$val" | log -p debug
done
```

Reading the body and checking the HMAC signature. GitHub sends a hex-encoded HMAC-SHA-256 digest of the JSON body in the X-Hub-Signature-256 header (as sha256=<hex>), computed with the secret chosen while creating the webhook. Without this layer of security, anyone could send a POST and trigger our CD scripts, maybe making us download malicious builds. In a shell script, the easiest way to calculate an HMAC is with the openssl command-line tool. We are using dd to read exactly Content-Length bytes from stdin, and the body is passed to openssl through a pipe with printf '%s' so that no trailing newline is added (a here-string, i.e. <<<, did not work for me: it appends a newline, which changes the digest). Parameter expansion is used to split strings. Place your webhook secret in the GITHUB_WEBHOOK_SECRET variable and set ENFORCE_HMAC to something other than 0 (I thought disabling signature checking could be useful for debugging purposes). You can now play with cURL/HTTPie/[insert your favourite HTTP client here] to check that you receive 401 Unauthorized responses as expected.
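The signature check can also be pulled into a small function and tried against a known vector before pointing GitHub at it. This is a sketch, assuming the openssl CLI is installed; verify_signature is a hypothetical helper, and the expected value is the HMAC-SHA-256 result of test case 2 in RFC 4231 (key Jefe, message what do ya want for nothing?).

```bash
#!/bin/bash
# Sketch: check an X-Hub-Signature-256 value ("sha256=<hex>") against a body.
# Assumes the openssl CLI is installed; verify_signature is a hypothetical helper.
set -euo pipefail

verify_signature() {
    # $1: webhook secret, $2: raw request body, $3: header value, e.g. sha256=<hex>
    local digest
    # printf '%s' passes the body through unchanged (no trailing newline added)
    digest=$(printf '%s' "$2" | openssl dgst -sha256 -hmac "$1")
    digest=${digest##* }    # keep only the hex digest after the last space
    [[ "$digest" == "${3#sha256=}" ]]
}

# HMAC-SHA-256 test case 2 from RFC 4231 (key "Jefe"), used as a known-good vector
expected='sha256=5bdcc146bf60754e6a042426089575c75a003f089d2739839dec58b964ec3843'
if verify_signature 'Jefe' 'what do ya want for nothing?' "$expected"; then
    echo 'signature OK'
fi
```

Note that the header value stored in ghsig still has its leading space, which the handler strips with ${ghsig#*=}.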

```bash
printf "Trying to read request content... %s bytes\n" "$content_length" | log -p info
# Read exactly Content-Length bytes of body from the socket
content=$(dd bs=1 count="$content_length" 2> >(log -p debug))
# printf '%s' feeds the body to openssl without adding a newline
mysig=$(printf '%s' "$content" | openssl dgst -sha256 -hmac "$GITHUB_WEBHOOK_SECRET")

# openssl prints "<label>= <hex>"; GitHub sends "sha256=<hex>"
if [[ "${mysig#* }" == "${ghsig#*=}" ]]; then
    log -p notice <<<"HMAC signatures match, proceeding further."
else
    log -p warning <<<"HMAC signatures do not match! Request is not authenticated!"
    if [[ "$ENFORCE_HMAC" != 0 ]]; then
        send_response "401 Unauthorized" "Provide signature as HTTP header."
        log -p err <<<"Exiting now because HMAC signature enforcing is required."
        exit 1
    fi
fi
```

Sending the HTTP response. We will send an appropriate response to GitHub with the function defined earlier.

```bash
if [[ "$event_type" == "none" ]]; then
    send_response "400 Bad Request" "Please provide event type as HTTP header."
    log -p err <<<"X-GitHub-Event header was not provided."
    exit 1
fi

if [[ "$delivery_id" == "none" ]]; then
    send_response "400 Bad Request" "Please provide delivery ID as HTTP header."
    log -p err <<<"X-GitHub-Delivery header was not provided."
    exit 1
fi

printf "GitHub Delivery ID: %s\n" "$delivery_id" | log -p info
printf "GitHub Event type: %s\n" "$event_type" | log -p info

case "$event_type" in
    "workflow_run")
        send_response "200 OK" "Acknowledged workflow run!"
        ;;
    *)
        send_response "204 No Content" ""
        exit 0
        ;;
esac
```

The “HTTP server” part of the script is complete! You can also test it by asking GitHub to resend past webhooks from the web UI.
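To exercise the whole handler without waiting for GitHub, you can forge a delivery yourself, as long as you know the secret. A sketch, assuming the openssl CLI; fake_delivery, the delivery ID and the payload are made up for illustration. Using action requested means the handler acknowledges the run but stops before deploying anything.

```bash
#!/bin/bash
# Sketch: print a complete, correctly signed fake workflow_run delivery as a raw
# HTTP/1.1 request. Assumes the openssl CLI; fake_delivery and the sample payload
# are hypothetical, not something GitHub provides.
set -euo pipefail

fake_delivery() {
    # $1: webhook secret, $2: JSON payload
    local digest length
    digest=$(printf '%s' "$2" | openssl dgst -sha256 -hmac "$1")
    length=$(( $(printf '%s' "$2" | wc -c) ))    # body size in bytes
    printf 'POST / HTTP/1.1\r\n'
    printf 'Host: localhost\r\n'
    printf 'X-GitHub-Event: workflow_run\r\n'
    printf 'X-GitHub-Delivery: local-test-1\r\n'
    printf 'X-Hub-Signature-256: sha256=%s\r\n' "${digest##* }"
    printf 'Content-Type: application/json\r\n'
    printf 'Content-Length: %d\r\n' "$length"
    printf '\r\n'
    printf '%s' "$2"
}

# "requested" runs are acknowledged but never trigger a deployment
payload='{"action":"requested","workflow_run":{"head_branch":"master"}}'
request=$(fake_delivery 'change-me' "$payload")
printf '%s\n' "$request"
```

On the server, something like fake_delivery "$GITHUB_WEBHOOK_SECRET" "$payload" | /var/lib/cd/handle-webhook.sh should then print a 200 OK response.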

Parsing the JSON body and downloading artifacts

JSON body. The JSON schema of GitHub webhook payloads can be found in the official GH docs. We will use jq to parse JSON: with its -r flag, it prints the fields we’re interested in on standard output, each on a separate line. This stream can be passed to the read builtin with IFS set to \n. The || true at the end of the command is needed because of set -e: read -d '' reads until a NUL byte that never arrives, so it returns a non-zero status at the end of input even though all the variables have been assigned. If jq can’t find one of the fields we asked it to extract (e.g., artifacts_url in events that signal the start of a workflow), it simply prints null for it.
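To see why the || true matters, here is a minimal sketch of the same read idiom, with printf standing in for jq -r so it runs without jq (the three values are made up). It also records read’s exit status. One caveat worth knowing: since newline is the separator, a multi-line commit message pushes its extra lines into the following variable (author, in the handler).

```bash
#!/bin/bash
# Sketch: how `IFS=$'\n' read -r -d ''` splits one-value-per-line output.
# printf stands in for `jq -r` here so the example runs without jq installed.
set -euo pipefail

# read -d '' hits end of input before any NUL byte, so it returns 1
# even though all variables get assigned; the || branch records that.
IFS=$'\n' read -r -d '' action branch conclusion < <(
    printf '%s\n' completed master success
) && status=0 || status=$?

printf 'action=%s branch=%s conclusion=%s (read returned %s)\n' \
    "$action" "$branch" "$conclusion" "$status"
```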

I want to run CD workflows only on the main branch (main, master, …), so I added a check against the variable MAIN_BRANCH that you can configure at the top of the script. GitHub sends workflow_run events even when CI workflows start, but we’re only interested in running a custom action when a workflow succeeds.

```bash
# jq prints one field per line; read splits them on newlines
IFS=$'\n' read -r -d '' action branch workflow_status \
    name conclusion url artifacts \
    commit message author < <(
    jq -r '.action,
        .workflow_run.head_branch,
        .workflow_run.status,
        .workflow_run.name,
        .workflow_run.conclusion,
        .workflow_run.html_url,
        .workflow_run.artifacts_url,
        .workflow_run.head_commit.id,
        .workflow_run.head_commit.message,
        .workflow_run.head_commit.author.name' <<<"$content") || true

printf 'Workflow run "%s" %s! See %s\n' "$name" "$workflow_status" "$url" | log -p notice
printf 'Head commit SHA: %s\n' "$commit" | log -p info
printf 'Head commit message: %s\n' "$message" | log -p info
printf 'Commit author: %s\n' "$author" | log -p info

# Only completed, successful runs on the main branch trigger a deployment
if [[ "$action" != "completed" ]] \
    || [[ "$conclusion" != "success" ]] \
    || [[ "$branch" != "$MAIN_BRANCH" ]]; then
    exit 0
fi

log -p notice <<<"Proceeding with continuous delivery!"
```

Build artifacts. Before running the custom CD script, which depends on your specific deployment scenario, we will download all the artifacts built on GitHub Actions during CI. For example, in our Dart/Flutter webapp this could include the built website, with Dart already compiled to JavaScript. In the case of our Python backend, the artifact is a Python wheel. This webhook handler script is completely language-agnostic, though, meaning that you can use it with whatever language or build system you want.

We will download and extract all artifacts into a temporary directory and then pass its path as an argument to the CD script, along with other useful information such as the branch name and the commit SHA. The function download_artifacts downloads and extracts the ZIP files stored on GitHub using the Artifacts API. It iterates over the JSON array and extracts the appropriate fields using jq’s array access syntax. GitHub answers a GET on the archive_download_url listed in each artifact’s entry with a 302 temporary redirect, so we use cURL’s -L to make it follow redirects.

```bash
function download_artifacts {
    # $1: artifacts URL from the workflow_run payload
    # $2: directory to download artifacts to
    local artifacts_payload artifacts_amount i name url tmpfile
    pushd "$2" &>/dev/null
    artifacts_payload=$(curl --no-progress-meter --fail -u "$GITHUB_API_USER:$GITHUB_API_TOKEN" "$1" 2> >(log -p debug))
    # Count the entries actually returned (total_count may exceed one page)
    artifacts_amount=$(jq -r '.artifacts | length' <<<"$artifacts_payload")
    for i in $(seq 1 "$artifacts_amount"); do
        printf 'Downloading artifact %s/%s...\n' "$i" "$artifacts_amount" | log -p info
        name=$(jq -r ".artifacts[$((i - 1))].name" <<<"$artifacts_payload")
        url=$(jq -r ".artifacts[$((i - 1))].archive_download_url" <<<"$artifacts_payload")
        printf 'Artifact name: "%s" (downloading from %s)\n' "$name" "$url" | log -p info
        tmpfile=$(mktemp)
        printf 'Downloading ZIP to %s\n' "$tmpfile" | log -p debug
        # -L follows the 302 redirect to the actual ZIP file
        curl --no-progress-meter --fail -L -u "$GITHUB_API_USER:$GITHUB_API_TOKEN" --output "$tmpfile" "$url" 2> >(log -p debug)
        mkdir "$name"
        printf 'Unzipping into %s...\n' "$2/$name" | log -p debug
        unzip "$tmpfile" -d "$2/$name" | log -p debug
        rm "$tmpfile"
    done
    popd &>/dev/null
}

artifacts_dir=$(mktemp -d)
printf 'Downloading artifacts to %s...\n' "$artifacts_dir" | log -p info
download_artifacts "$artifacts" "$artifacts_dir"
```

Final step: running your CD script! Since log is a thin wrapper around systemd-cat, passing -t again selects a different identifier, so the output of the custom script stands out from the garbage of our webhook handler in the system journal. Again, configure $CD_SCRIPT appropriately; it will run in the service’s working directory (the root directory, unless you set WorkingDirectory= in the service unit) and it will receive the path to the directory containing the downloaded artifacts as its first argument, followed by the branch name and the commit SHA. Note: it will run with root privileges unless specified otherwise in the service unit file (e.g. with User=)!

```bash
printf 'Running CD script!\n' | log -p notice
# Arguments: artifacts directory, branch name, commit SHA
"$CD_SCRIPT" "$artifacts_dir" "$branch" "$commit" 2>&1 | log -t "CD script" -p info
```

For example, our Python backend CD script looks something like this:

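```bash
#!/bin/bash
# $1: directory containing the downloaded artifacts
cd "$1"
systemctl stop my-awesome-backend.service
source /var/lib/my-backend/virtualenv/bin/activate
# Make ** match wheels at any depth (globstar is off by default in Bash)
shopt -s globstar
pip3 install --no-deps --force-reinstall **/*.whl
systemctl start my-awesome-backend.service
```

Bonus points for cleanup :) Remove the temporary directory created earlier to store the artifacts: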

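```bash
# With set -e, an earlier failure skips this step; registering
# trap 'rm -rf "$artifacts_dir"' EXIT right after mktemp -d avoids that.
printf 'Deleting artifacts directory...\n' | log -p info
rm -r "$artifacts_dir"
```

Conclusion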

You can find the complete script here. It’s 158 LoC, not that much, and it’s very flexible. There’s room for improvement, e.g. selecting different scripts on different branches. Let me know if you extend this script or use a similar approach!
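As for selecting different scripts on different branches, one possible sketch is a case statement that maps a branch to a script path (select_cd_script, the branch names and the paths are hypothetical). In the handler it could replace the MAIN_BRANCH check: exit 0 when it fails, otherwise run the script it prints.

```bash
#!/bin/bash
# Sketch: pick a CD script per branch instead of a single $CD_SCRIPT.
# Branch names and script paths are hypothetical placeholders.
set -euo pipefail

select_cd_script() {
    # $1: branch name; prints the script path, fails for unknown branches
    case "$1" in
        main|master) printf '%s\n' /var/lib/cd/deploy-production.sh ;;
        staging)     printf '%s\n' /var/lib/cd/deploy-staging.sh ;;
        *)           return 1 ;;
    esac
}

if script=$(select_cd_script "staging"); then
    printf 'Would run %s\n' "$script"
fi
```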

[1] At this point, you might be wondering whether it is worth it. It probably isn’t, and the simplest solution could be a 100 LoC Python + FastAPI script to handle webhooks. But I really wanted to do this with basic UNIX tools.

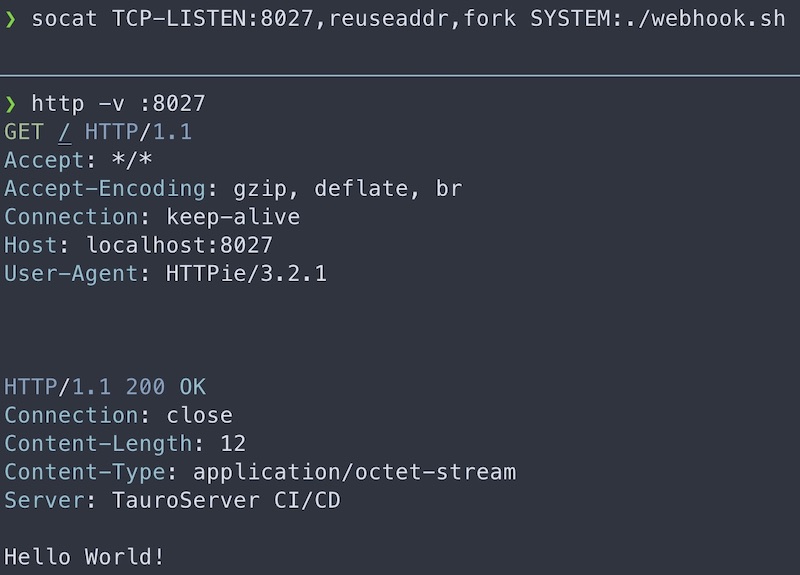

[2] You can also use socat to do what systemd is doing for us if you want to test your script on your local machine: socat TCP-LISTEN:8027,reuseaddr,fork SYSTEM:/path/to/handle-webhook.sh.